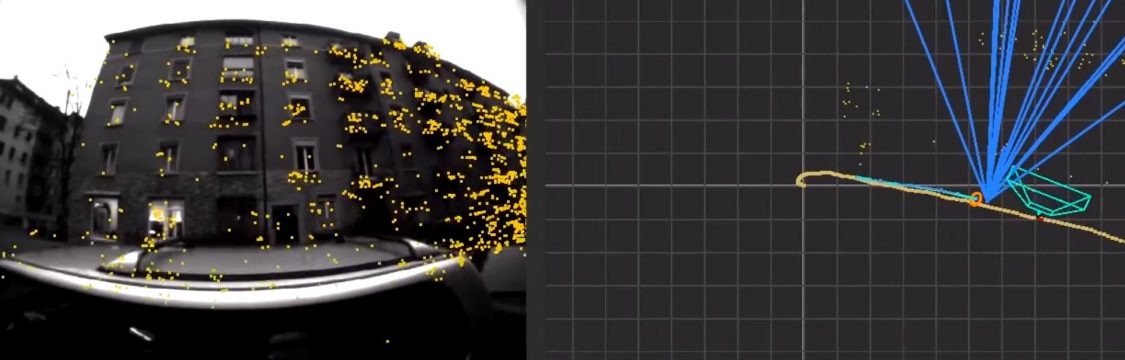

This interesting project, described in the paper “Get Out of My Lab: Large-scale, Real-Time Visual-Inertial Localization”, uses Google’s experimental ‘Tango’ hardware to improve real-time tracking of a device’s position and orientation.

The hardware is a tablet computer with a motion-tracking camera, a 4-megapixel camera with 2 µm pixels, integrated depth sensing and a high-performance processor. This equipment helps with tasks like scanning rooms. A limited number of kits were produced and given or sold to professional developers to encourage new technological developments.

One day we may see more accurate and engaging augmented reality. I’ve often thought that overlaying information onto the world around us would be useful: walking down a street and seeing for-sale signs, for example. Then again, it may just end up overloaded with advertising, becoming a virtual eyesore.

Source:

Get Out of My Lab: Large-scale, Real-Time Visual-Inertial Localization

Simon Lynen, Torsten Sattler, Michael Bosse, Joel Hesch, Marc Pollefeys and Roland Siegwart.

Autonomous Systems Lab, ETH Zurich

Computer Vision and Geometry Group, Department of Computer Science, ETH Zurich

http://www.roboticsproceedings.org/rss11/p37.pdf