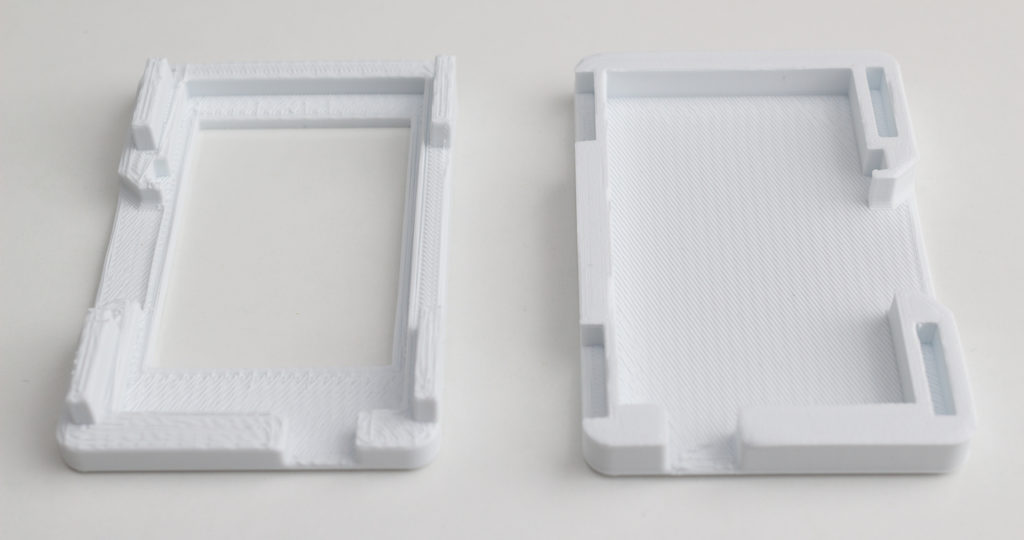

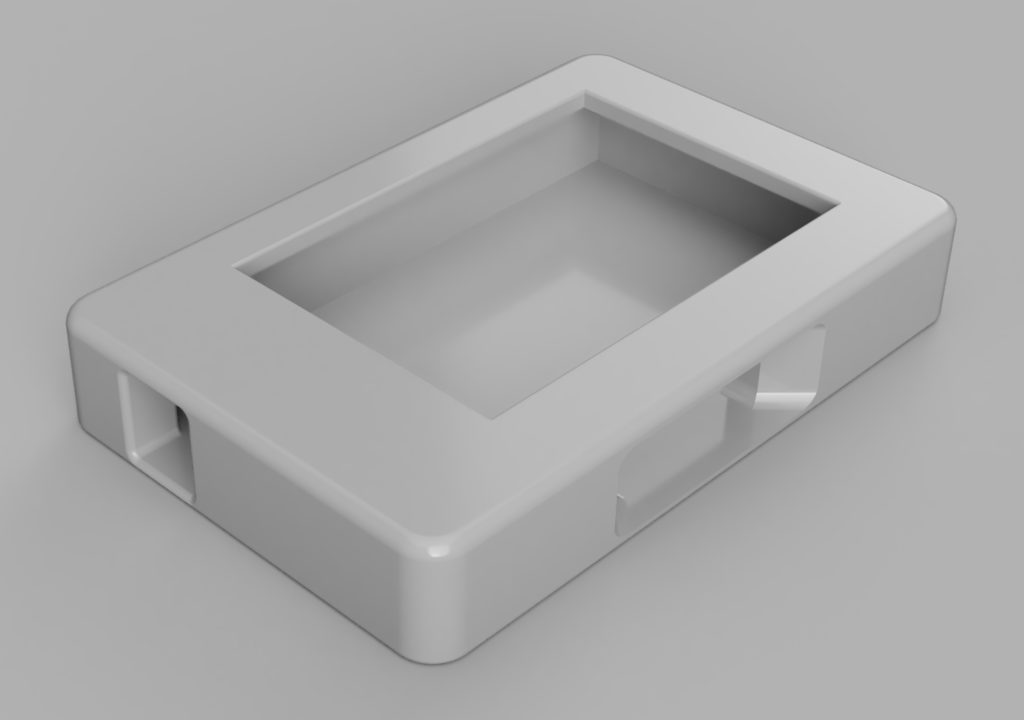

I created a case for my ESP32 E‑Paper Status Display using Fusion 360, Cura and a 3D printer.

It is available to download at Thingiverse.

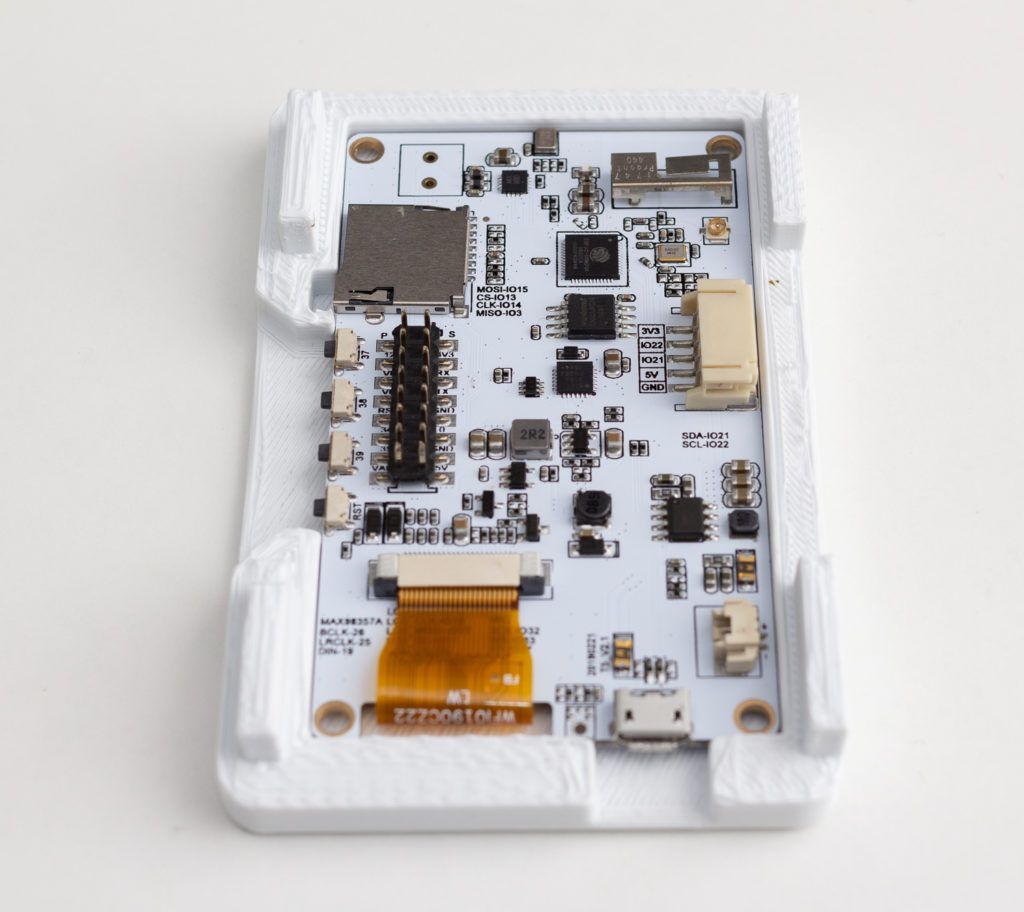

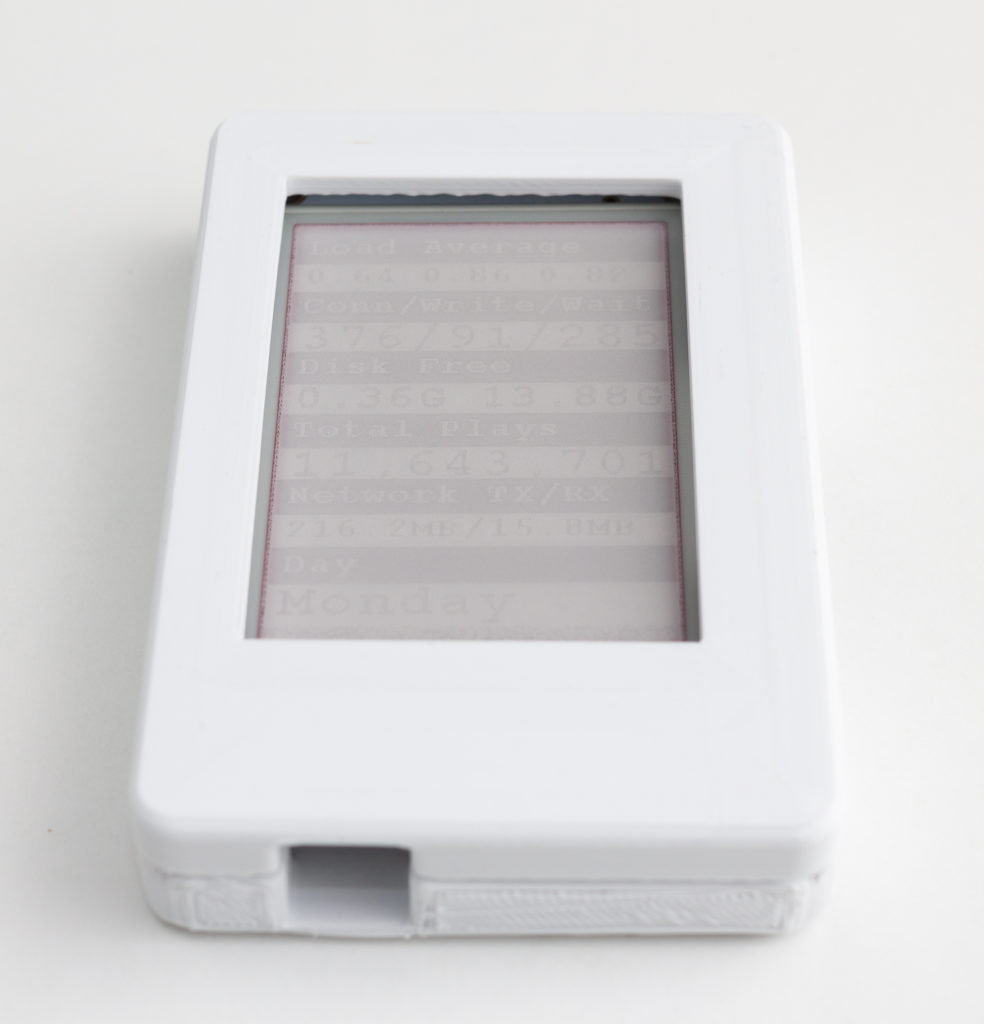

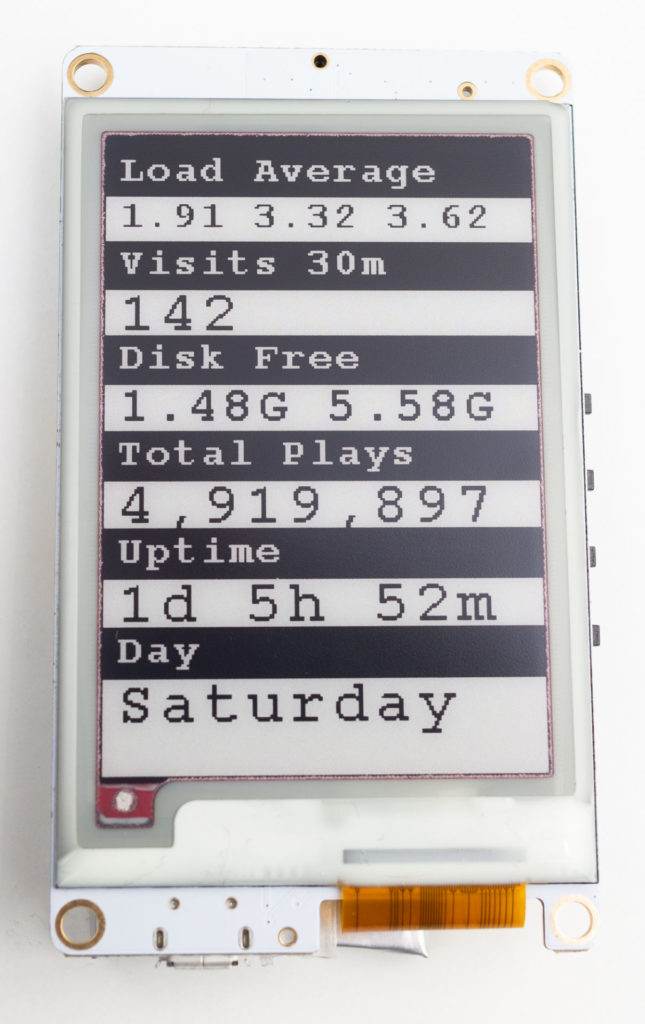

Using an ESP32 board with an embedded E‑Paper display, I created a gadget that shows status information from my web server.

E‑Paper, also known as E‑Ink, only needs power when being updated, and uses no power between updates. This means that the gadget can be powered for weeks from a rechargeable battery.
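As a rough illustration of why weeks of battery life are plausible, here is a back-of-the-envelope estimate. Every number in it (battery capacity, sleep and active current, wake time, update rate) is an assumed placeholder rather than a measurement from this gadget, and the function name is just for illustration. It also assumes the board still draws a small sleep current between updates, even though the display itself draws nothing.

```python
def estimate_battery_days(capacity_mah, sleep_ma, active_ma,
                          active_seconds, updates_per_day):
    """Estimate runtime in days from average daily charge consumption.

    All inputs are assumptions supplied by the caller:
    capacity_mah    - usable battery capacity in mAh
    sleep_ma        - current drawn while sleeping between updates, in mA
    active_ma       - current drawn while awake (Wi-Fi + display refresh), in mA
    active_seconds  - how long each update keeps the board awake
    updates_per_day - number of display updates per day
    """
    active_hours = active_seconds * updates_per_day / 3600.0
    sleep_hours = 24.0 - active_hours
    daily_mah = active_ma * active_hours + sleep_ma * sleep_hours
    return capacity_mah / daily_mah


# Hypothetical figures: 1000 mAh battery, 0.1 mA asleep, 100 mA awake,
# 36 seconds awake per update, one update per hour.
days = estimate_battery_days(1000, 0.1, 100, 36, 24)
print(f"Estimated runtime: {days:.0f} days")
```

With real measurements substituted in, the same arithmetic shows how strongly the update interval affects runtime, since the awake time dominates the daily consumption.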

The gadget is designed to sit on my wall or desk and show regularly updated information about my web server, so I stay informed of web site problems and statistics. The information displayed can easily be changed, for example to the latest weather, news, currency prices or anything else that can be accessed via the internet. E‑Paper means it uses very little power and gives off very little heat compared to a computer display or television.

You can view my code on GitHub if you are interested in making your own.

Using my DJI Spark drone and taking around 40 photographs using its built-in camera, I was able to make a 3D reconstruction of where I live:

Many people in the UK may be familiar with seeing groups of people, or sometimes a parked vehicle, clearly displaying a sign saying ‘Traffic Survey.’ These people are employed to keep a tally of the number of vehicles using a road, and the types of vehicles. This information is important for planning infrastructure, helping to provide transport capacity more efficiently for cars, trucks/lorries and buses.

Many will also have seen temporary pressure sensors across roads, linked to a data collection box attached to a street lamp. This system also provides useful data on the number of vehicles using a road.

By using two pressure sensors, it’s possible to record fairly accurately the numbers of vehicles passing in two directions. However, there may be some inaccuracies when vehicles pass simultaneously or almost simultaneously. A 15-minute time period may have an inaccuracy of 10% (http://www.windmill.co.uk/vehicle-sensing.html). For roads with more than two lanes, accuracy would be lower still and the system probably wouldn’t be feasible.
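To make the two-sensor idea concrete, here is a minimal sketch of how direction could be inferred from the order in which the sensors are triggered. It is my own simplification, not how any real counter works: it treats each sensor hit as one vehicle (ignoring multiple axles), and the names `count_directions` and `max_gap` are made up for illustration.

```python
def count_directions(hits_a, hits_b, max_gap=0.5):
    """Pair hits on sensor A and sensor B to infer travel direction.

    hits_a, hits_b: timestamps in seconds for each sensor.
    max_gap: assumed maximum time for one vehicle to travel between sensors.
    Returns (a_to_b, b_to_a, unmatched).
    """
    events = sorted([(t, "A") for t in hits_a] + [(t, "B") for t in hits_b])
    a_to_b = b_to_a = unmatched = 0
    i = 0
    while i < len(events):
        t, sensor = events[i]
        has_partner = (
            i + 1 < len(events)
            and events[i + 1][1] != sensor        # the other sensor fired next
            and events[i + 1][0] - t <= max_gap   # soon enough to be one vehicle
        )
        if has_partner:
            if sensor == "A":
                a_to_b += 1
            else:
                b_to_a += 1
            i += 2  # consume both hits of the pair
        else:
            unmatched += 1
            i += 1
    return a_to_b, b_to_a, unmatched


# Hypothetical timestamps: one vehicle each way, plus two stray hits.
print(count_directions([1.00, 5.10, 9.00], [1.05, 5.00, 20.0]))
```

When two vehicles cross the sensors at nearly the same moment, the hits interleave and this kind of pairing can match the wrong ones, which is one plausible source of the inaccuracy mentioned above.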

There are also electronic sensors dug into roads, which I’ve noticed on approaches to traffic lights. These may be used to alter the timing of traffic lights depending on traffic, and it seems they could also be used to collect traffic survey data. You may have noticed traffic lights changing as you approach them; a system like this may be detecting the mechanical energy of your vehicle passing over sensors. I expect there are also magnetic versions of these sensors that sense the metal body of a vehicle. In the US, motorcycles sometimes fail to trigger these systems at automated intersections, which causes problems, and some state laws allow vehicles to proceed if a sensor fails to detect their presence.

I’ve recently noticed what seems like a new technology: a video camera mounted on a street light, with a data collection box attached to it. After researching it, I found that these are video cameras that record conventional HD video for a period of 3 to 7 days. The model I saw in use also has advanced features like remote management and event alerts sent via mobile cellular networks (LTE), so settings can be changed and problems reported without anyone having to travel back to where the unit is deployed.

I expect computer vision techniques (e.g. OpenCV) are later used to analyse the numbers and types of vehicles passing:
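I don't know how the survey companies actually process their footage, but a common building block in this kind of analysis is a virtual counting line. The sketch below assumes a detector and tracker (for example, one built with OpenCV) has already produced a list of centroid positions per vehicle; the track data, the `count_line_crossings` name and the `line_y` parameter are all hypothetical.

```python
def count_line_crossings(tracks, line_y):
    """Count tracked vehicles crossing a horizontal line in the image.

    tracks: dict mapping a track id to a list of (x, y) centroids, one per frame.
    line_y: y coordinate (in pixels) of the virtual counting line.
    Returns a dict with counts for each direction of travel.
    """
    counts = {"down": 0, "up": 0}
    for points in tracks.values():
        # Compare each centroid with the next one in the same track.
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:      # moved from above the line to below it
                counts["down"] += 1
                break                  # count each track at most once
            if y0 >= line_y > y1:      # moved from below the line to above it
                counts["up"] += 1
                break
    return counts


# Hypothetical tracks: two vehicles cross the line, one never reaches it.
example_tracks = {
    "car-1": [(10, 80), (10, 95), (10, 110)],
    "car-2": [(50, 130), (50, 105), (50, 90)],
    "bus-1": [(70, 20), (70, 40)],
}
print(count_line_crossings(example_tracks, line_y=100))
```

Classifying the type of vehicle would need a separate step, such as an object detector that labels each track, which is not shown here.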

I expect there are strict rules in place to prevent ANPR (Automatic Number Plate Recognition) being used, as this may violate the privacy of drivers. However, if there are not, I expect travel time surveys could be made by calculating how long a commute takes for individual drivers, and how that changes over time. Perhaps if this was calculated anonymously, it would be a usable technique.
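One way the anonymous version might work is to replace each plate with a keyed hash before anything is stored, and match the hashes between two survey sites. This is only a sketch of the idea under my own assumptions: the function names and sample plates are made up, and strictly speaking this is pseudonymisation rather than true anonymity, so the secret key would need to be discarded after the survey.

```python
import hashlib
import hmac
import secrets


def pseudonymise(plate, key):
    """Return a keyed SHA-256 hash of a normalised plate string."""
    normalised = plate.upper().replace(" ", "")
    return hmac.new(key, normalised.encode(), hashlib.sha256).hexdigest()


def travel_times(site_a, site_b, key):
    """Match sightings at two sites by hashed plate and return durations.

    site_a, site_b: lists of (plate, timestamp_in_seconds) sightings.
    Only vehicles seen at site A and later at site B are included.
    """
    first_seen = {}
    for plate, ts in site_a:
        # Keep the earliest sighting of each hashed plate at site A.
        first_seen.setdefault(pseudonymise(plate, key), ts)
    durations = []
    for plate, ts in site_b:
        start = first_seen.get(pseudonymise(plate, key))
        if start is not None and ts > start:
            durations.append(ts - start)
    return durations


# Hypothetical sightings; the key is random and never stored with the data.
key = secrets.token_bytes(32)
site_a = [("AB12 CDE", 100.0), ("XY34 ZZZ", 120.0)]
site_b = [("ab12cde", 400.0), ("QQ99 QQQ", 500.0)]
print(travel_times(site_a, site_b, key))
```

A keyed hash is used rather than a plain one because number plates follow a predictable format, so an unkeyed hash could be reversed simply by hashing every possible plate.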

While some companies have monitored mobile phones using the serial numbers (hardware addresses) of their Bluetooth and Wi-Fi radios, the general public has often expressed concerns over privacy. An example hardware provider for this is http://www.libelium.com.

In conclusion, I found it interesting to research what the cameras and other equipment I see are used for, and I expect that analysing queues of videos for traffic data is an interesting field.

My Sony smartphone has an unusual TRRRS (Tip-Ring-Ring-Ring-Sleeve) connector, allowing it to use very reasonably priced noise cancelling headphones (Sony MDR-NC31EM) that have an extra microphone in each earphone.

I found that the Sony app Sound Recorder allows recording directly from these two microphones, which are great for binaural recording, so I gave it a go while walking along a few busy streets. You can listen on YouTube and Soundcloud:

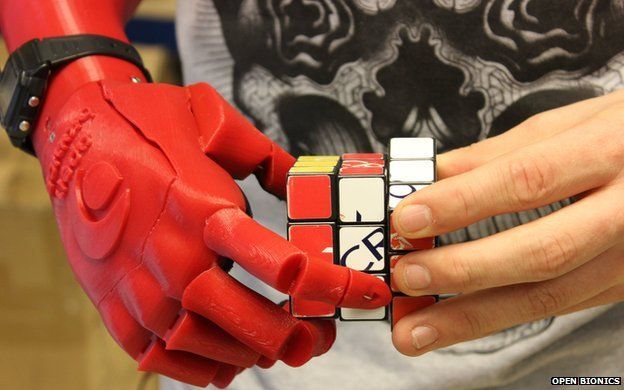

“A prototype 3D-printed robotic hand that can be made faster and more cheaply than current alternatives is this year’s UK winner of the James Dyson Award.” (BBC News link)

This is a fantastic idea, which has so much value to people without limbs. Bionic prosthetics can cost up to £100,000, and £30,000 for a single hand.

The 3D-printed robotic hand in the article costs £2,000, which is the same price as a prosthetic hook, and offers similar functionality to the top-of-the-range options.

The designer gets to develop his interest in creating a product, while helping the estimated 11 million people who are hand amputees worldwide.

This project entitled “Large-Scale, Real-Time, Visual-Inertial Localization” is interesting, using Google’s experimental ‘Tango’ hardware to improve real-time tracking of location and position.

The hardware is a tablet computer with a motion tracking camera, a 4 megapixel camera with 2µm pixels, integrated depth sensing and a high-performance processor. This equipment aids in tasks like scanning rooms. A limited number of kits were produced and given or sold to professional developers with the intent of encouraging technological developments.

One day we may see more accurate and interesting augmented reality. I’ve often thought overlaying information onto our current reality would be interesting, for example walking down a street and seeing for-sale signs. It may just end up overloaded with advertising though, making a virtual eyesore.

Source:

Get Out of My Lab: Large-scale, Real-Time Visual-Inertial Localization

Simon Lynen, Torsten Sattler, Michael Bosse, Joel Hesch, Marc Pollefeys and Roland Siegwart.

Autonomous Systems Lab, ETH Zurich

Computer Vision and Geometry Group, Department of Computer Science, ETH Zurich

http://www.roboticsproceedings.org/rss11/p37.pdf