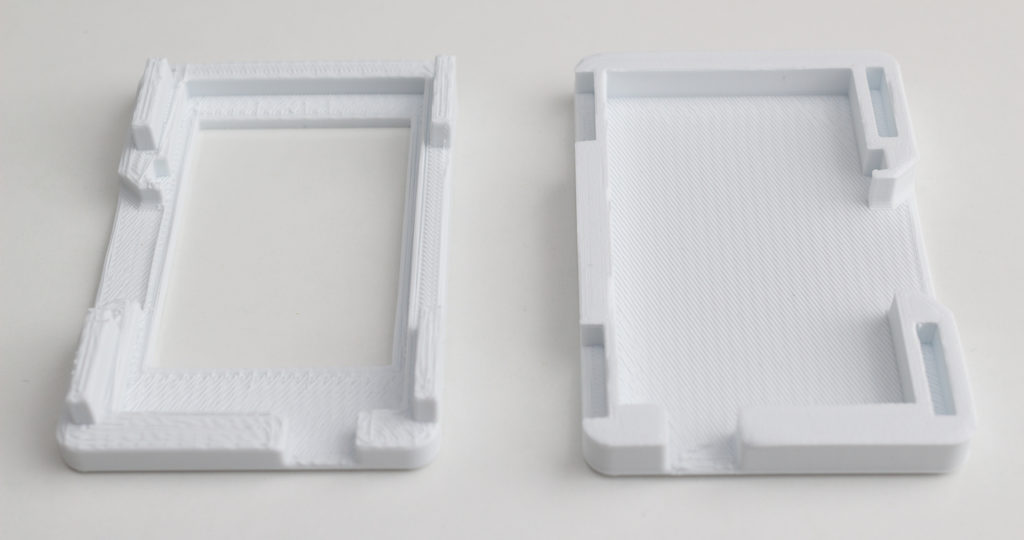

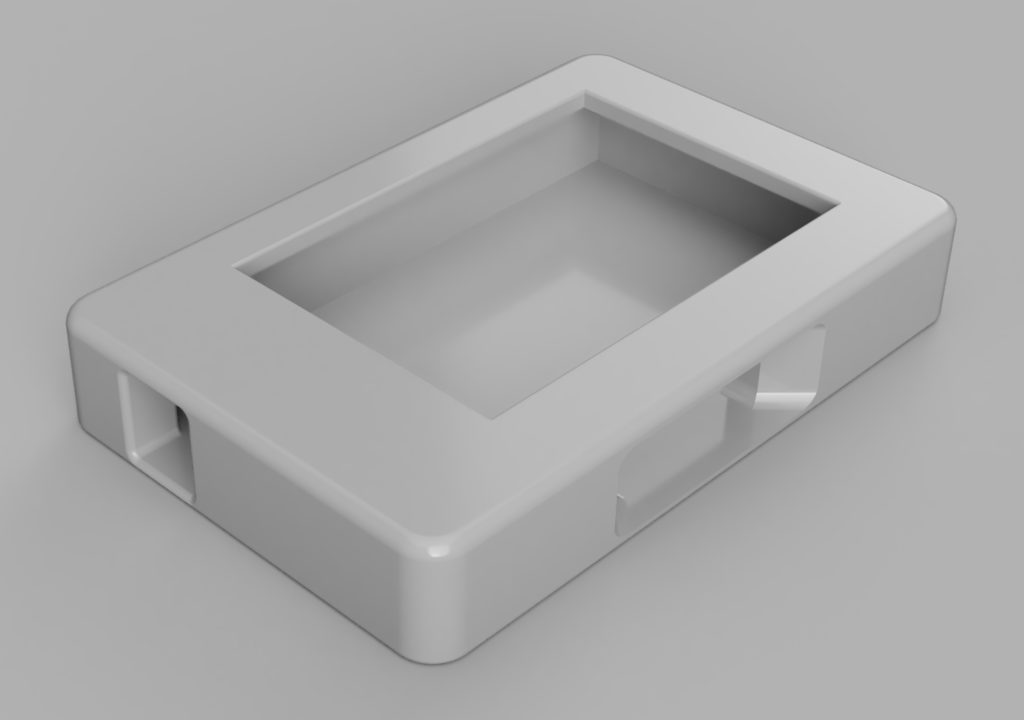

I created a case for my ESP32 E‑Paper Status Display using Fusion 360, Cura and a 3D printer.

It is available to download at Thingiverse.

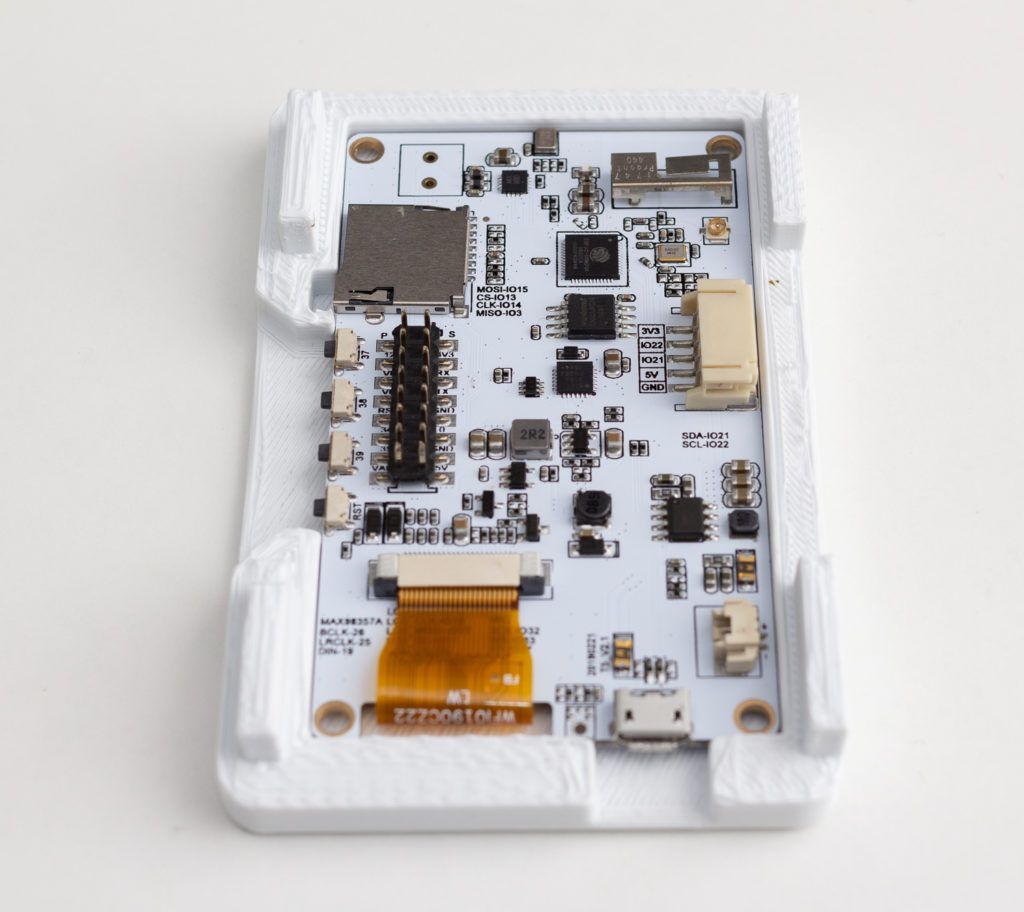

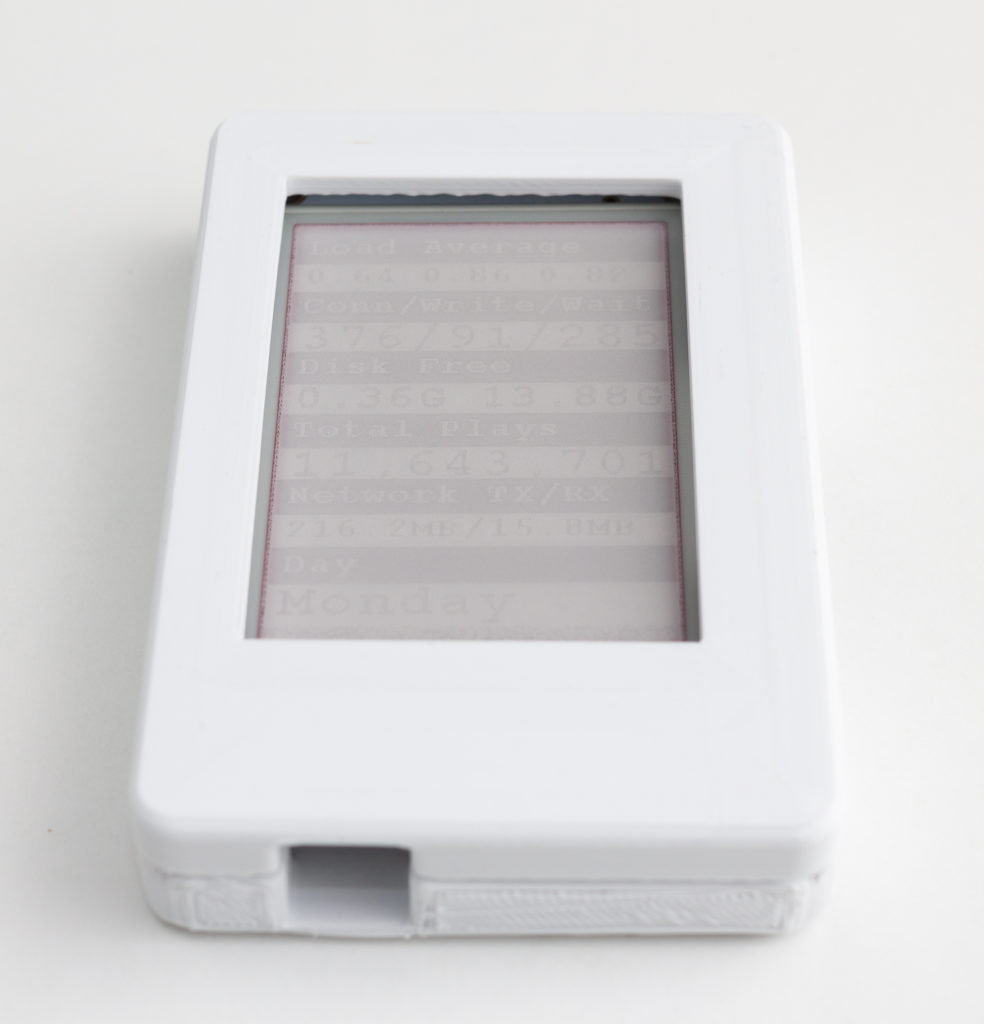

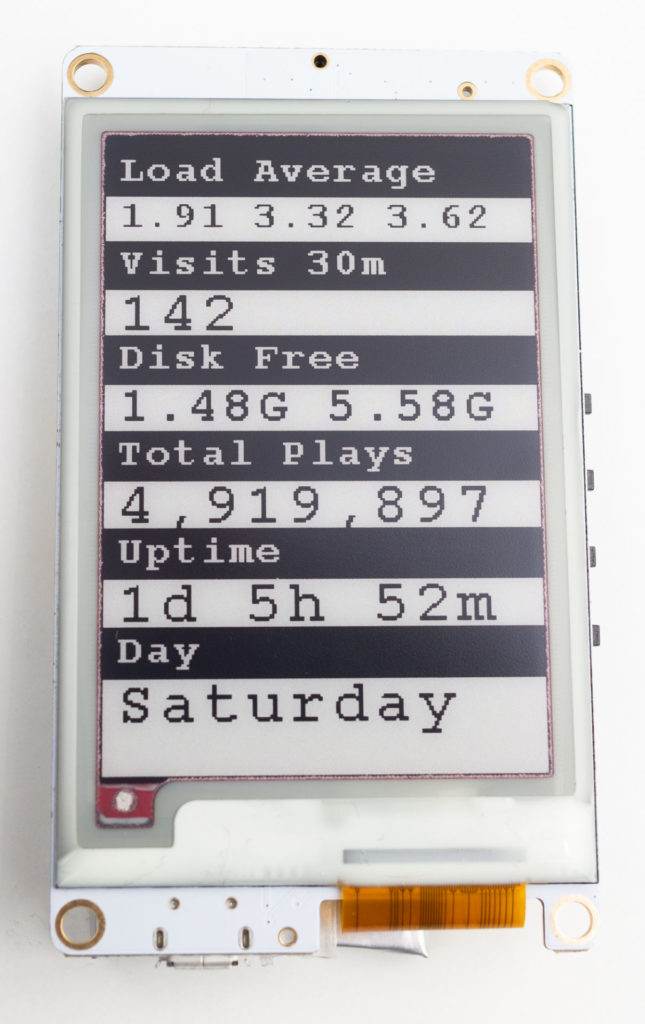

Using an ESP32 board with an embedded E‑Paper display, I created a gadget that shows status information from my web server.

E‑Paper, also known as E‑Ink, needs power only while the image is being updated; the image stays visible between updates without drawing any power. This means the gadget can run for weeks on a rechargeable battery.
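As a rough illustration of why infrequent updates translate into long battery life, here is a minimal duty-cycle estimate. Every figure in it (time awake per update, currents, update interval, battery capacity) is a hypothetical assumption, not a measurement of this gadget, and it assumes the ESP32 sleeps between updates, which the post does not state.

```python
# Hypothetical duty-cycle battery estimate for an e-paper status display.
# All numbers used here are illustrative assumptions, not measurements.

def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Time-weighted average current over one wake/sleep cycle."""
    if not 0 < active_s <= period_s:
        raise ValueError("active_s must be within (0, period_s]")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Ideal runtime in days, ignoring self-discharge and regulator losses."""
    return capacity_mah / avg_ma / 24


if __name__ == "__main__":
    # Assumed: awake 10 s at 100 mA (Wi-Fi + display refresh) every 10 minutes,
    # 0.1 mA while asleep, 1000 mAh battery.
    avg = average_current_ma(active_ma=100, active_s=10, sleep_ma=0.1, period_s=600)
    print(f"average current: {avg:.3f} mA")
    print(f"estimated life: {battery_life_days(1000, avg):.1f} days")
```

With these assumed figures the average current is about 1.77 mA and the estimate comes out at roughly 23.6 days. The awake time dominates, which is why updating less often stretches battery life so effectively.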

I designed this gadget to sit on my wall or desk and show regularly updated, important information from my web server, keeping me informed of web site problems and statistics. The information displayed can easily be changed, for example to the latest weather, news, currency prices, or anything else that can be accessed via the internet. Because it uses E‑Paper, it consumes far less power and produces far less heat than a computer monitor or television.

You can view my code on GitHub if you are interested in making your own.

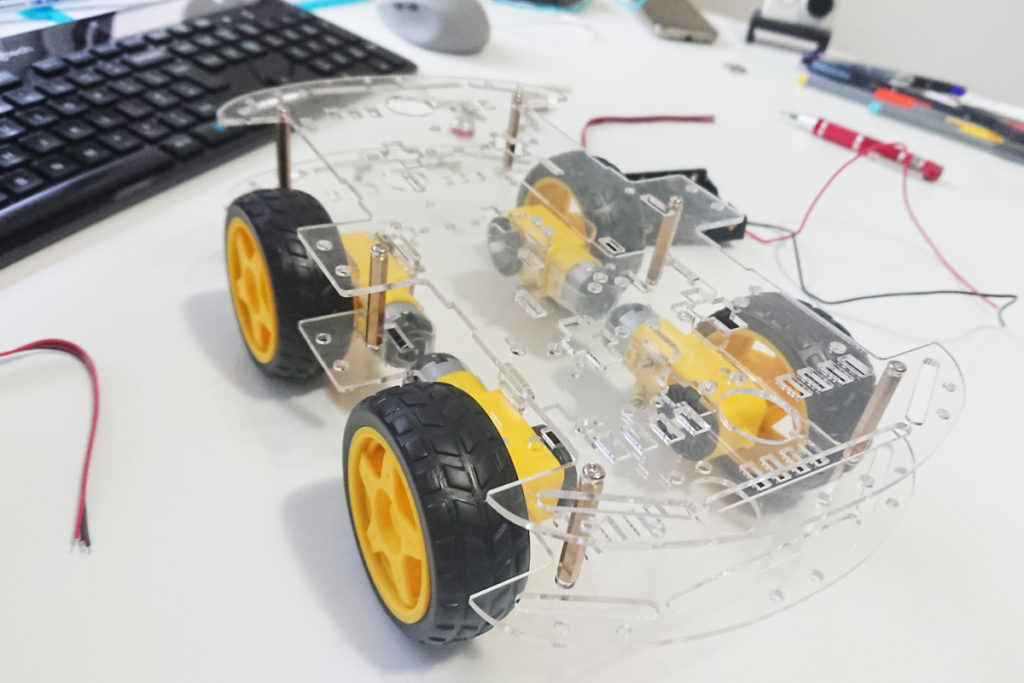

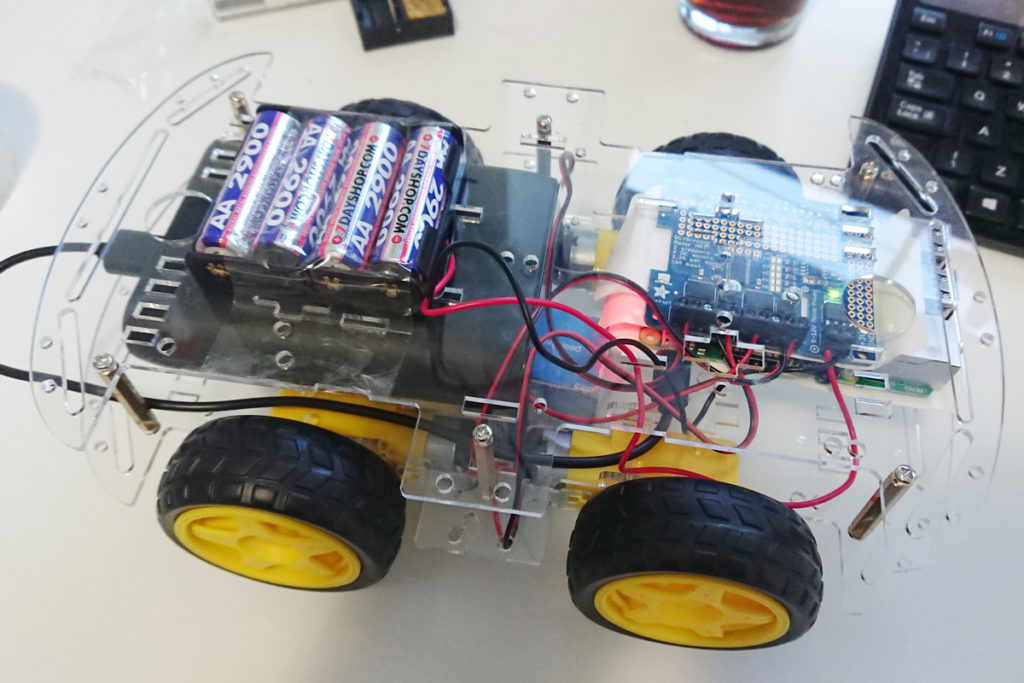

For a long time I had wanted to build a remote-controlled robot car that could be driven over the Internet at long range, using 4G/LTE cellular connectivity. So I did.

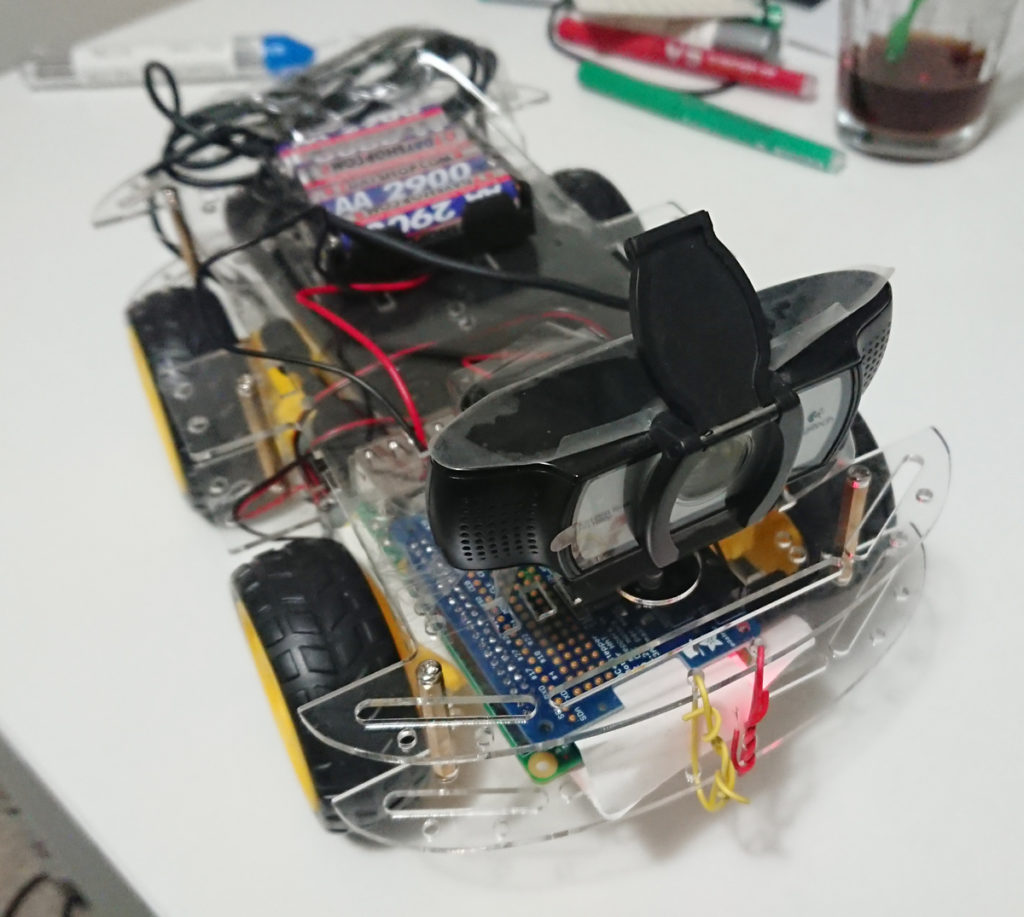

I used a Raspberry Pi 3B+, an Adafruit DC and Stepper Motor HAT, and a Logitech C930e USB UVC webcam.

The robot can connect to the Internet over Wi-Fi. I was able to slightly increase the effective Wi-Fi range by using a MikroTik router and adjusting its hardware retries and frame lifetime settings. The intention was to recover quickly from transmission errors and avoid congestion: video packets that could not be delivered in real time were discarded rather than repeatedly retried, keeping the network clear for transmissions that could succeed. With the same intention, I also used the iptables mangle table to set the DSCP value of the live video stream packets.
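The DSCP marking can be sketched as a small helper that builds (but does not run) an iptables mangle rule. The UDP port and the choice of the EF class are hypothetical placeholders, not the rule used on this robot; `--set-dscp-class` is a real option of iptables' DSCP target.

```python
# Sketch: build an iptables rule that marks outgoing video packets with a
# DSCP class in the mangle table. Port and class are hypothetical examples.

DSCP_CLASSES = {"CS0": 0, "AF41": 34, "CS5": 40, "EF": 46}


def tos_byte(dscp: int) -> int:
    """DSCP occupies the upper six bits of the IPv4 TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be 0-63")
    return dscp << 2


def mangle_rule(port: int, dscp_class: str = "EF") -> list[str]:
    """Return argv for a rule marking locally generated UDP packets
    sent to `port` with the given DSCP class."""
    if dscp_class not in DSCP_CLASSES:
        raise ValueError(f"unknown DSCP class {dscp_class}")
    return [
        "iptables", "-t", "mangle", "-A", "OUTPUT",   # packets created on the Pi
        "-p", "udp", "--dport", str(port),
        "-j", "DSCP", "--set-dscp-class", dscp_class,
    ]


if __name__ == "__main__":
    print(" ".join(mangle_rule(8000)))          # hypothetical video port
    print(hex(tos_byte(DSCP_CLASSES["EF"])))    # EF (46) as a TOS byte
```

The rule could then be applied as root with `subprocess.run(mangle_rule(8000), check=True)`. Marking in the OUTPUT chain suits traffic generated on the Pi itself; if the stream used TCP, the `-p udp` match would need to change.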

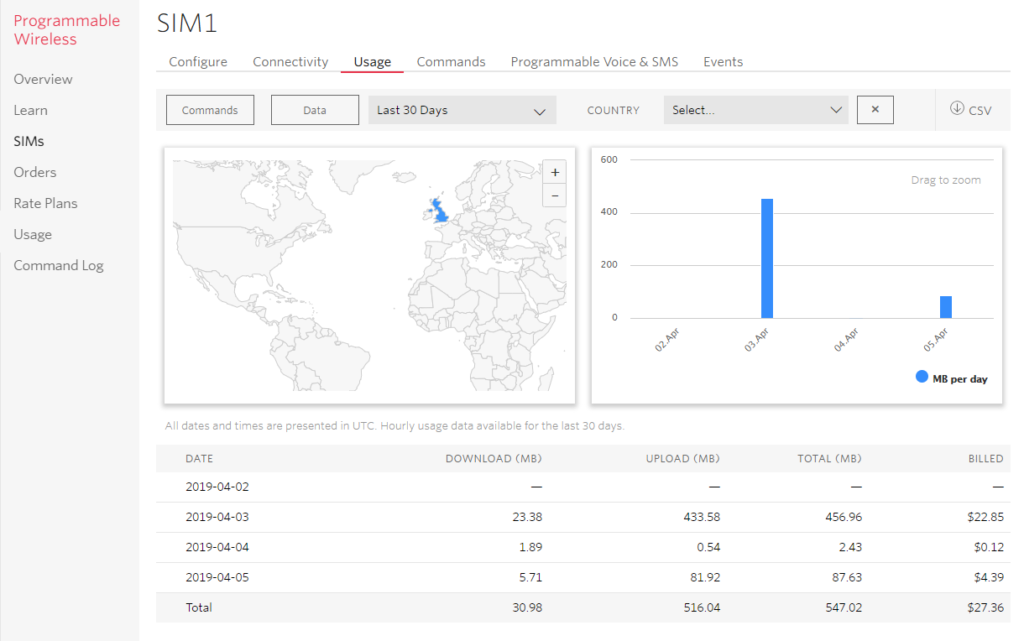

For long-range connectivity, I used Twilio Programmable Wireless to connect to local 4G/LTE cellular networks. I substantially lowered the data rate, to around 250 kbps, to make transmission more reliable and reduce costs, and got a virtually flawless live feed.
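To see why the data rate matters for cellular costs, here is a quick calculation of how much data a constant-bitrate stream uses, for example at around 250 kbps over a 30-minute drive. It counts only the stream payload and ignores protocol overhead, retransmissions and control traffic.

```python
def stream_megabytes(kbps: float, minutes: float) -> float:
    """Payload volume in megabytes (10**6 bytes) for a constant-bitrate stream.
    Ignores protocol overhead, retransmissions and control traffic."""
    bits = kbps * 1000 * minutes * 60
    return bits / 8 / 1_000_000


if __name__ == "__main__":
    # ~250 kbps for a ~30-minute drive
    print(f"{stream_megabytes(250, 30):.2f} MB")
```

At 250 kbps, 30 minutes works out to 56.25 MB; the same drive at, say, 2 Mbps would use eight times as much.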

The live video and audio stream uses FFmpeg for compression and streaming. FFmpeg has a plethora of settings, and I took the time to tune the bitrate, buffering and keyframe interval. I also made sure the webcam could natively encode video over UVC at the selected resolution, to reduce the load on the Raspberry Pi’s CPU. Video latency was often under a second, which is impressive considering the round trip involved.
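The kind of tuning described above can be sketched as a function that assembles an FFmpeg command line. Everything here is an assumption for illustration: the device path, resolution, MJPEG input format, libx264 encoder, output URL and all numeric values. Audio is omitted for brevity. It shows how bitrate, buffer size and keyframe interval map onto FFmpeg options, not the exact command used on the robot.

```python
# Hypothetical sketch: assemble an FFmpeg command for a low-bitrate,
# low-latency webcam stream. All values are illustrative assumptions.

def ffmpeg_args(device: str = "/dev/video0",
                size: str = "640x480",
                fps: int = 30,
                bitrate_k: int = 250,
                keyframe_s: float = 1.0,
                output: str = "udp://example.invalid:1234") -> list[str]:
    gop = max(1, round(fps * keyframe_s))  # keyframe interval in frames
    return [
        "ffmpeg",
        "-f", "v4l2",                  # capture via Video4Linux2
        "-input_format", "mjpeg",      # request compressed frames from the camera
        "-video_size", size,
        "-framerate", str(fps),
        "-i", device,
        "-c:v", "libx264",
        "-preset", "ultrafast",        # lowest CPU cost per frame
        "-tune", "zerolatency",        # disable encoder look-ahead buffering
        "-b:v", f"{bitrate_k}k",
        "-maxrate", f"{bitrate_k}k",
        "-bufsize", f"{bitrate_k}k",   # about one second of rate-control buffer
        "-g", str(gop),
        "-f", "mpegts", output,
    ]


if __name__ == "__main__":
    print(" ".join(ffmpeg_args(keyframe_s=2)))
```

A shorter keyframe interval lets viewers recover faster from lost packets, at the cost of spending more of a small bitrate budget on keyframes. The command could be launched with `subprocess.run(ffmpeg_args(), check=True)`.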

The control system uses Let’s Robot (now Remo.tv), based at Circuit Launch in California, which has a community of robot builders who love to create and share their devices. The platform’s programming language of choice is Python, and I also linked the robot to an existing API I had written in JavaScript, running on Node with PM2.
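On the Python side, a remote drive command ultimately has to become two motor speeds. The sketch below is a hypothetical mapping for a two-motor, differential-drive robot; the one-letter command names, the default speed and the `trim` parameter are assumptions, not Let’s Robot’s protocol. The trim shows one way to compensate for a robot that drifts to one side when driving straight.

```python
# Hypothetical sketch: map a one-letter drive command to signed left/right
# motor speeds (0-255 magnitude, sign = direction), with optional trim.

MAX_SPEED = 255

COMMANDS = {
    "F": (1, 1),     # forward
    "B": (-1, -1),   # backward
    "L": (-1, 1),    # spin left
    "R": (1, -1),    # spin right
    "S": (0, 0),     # stop
}


def clamp(value: float) -> int:
    return int(max(-MAX_SPEED, min(MAX_SPEED, round(value))))


def drive(command: str, speed: int = 200, trim: float = 0.0) -> tuple[int, int]:
    """Return (left, right) signed speeds.

    trim > 0 slows the left motor (steers left); trim < 0 slows the right
    motor (steers right). Trim applies only when driving straight."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command {command!r}")
    if not -1.0 <= trim <= 1.0:
        raise ValueError("trim must be between -1 and 1")
    left_dir, right_dir = COMMANDS[command]
    left_scale = right_scale = 1.0
    if left_dir == right_dir != 0:
        left_scale = 1 - max(trim, 0.0)
        right_scale = 1 - max(-trim, 0.0)
    return clamp(left_dir * speed * left_scale), clamp(right_dir * speed * right_scale)


if __name__ == "__main__":
    print(drive("F"), drive("F", trim=0.1), drive("L"))
```

With Adafruit’s older `Adafruit_MotorHAT` Python library, for example, the magnitude of each value would be passed to a motor’s `setSpeed()` (0 to 255) and the sign would select `FORWARD` or `BACKWARD` in `run()`.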

The first 4G/LTE long-range mission was successful, and the webcam was good enough to be used at night. Different members of the community took turns driving the robot. It didn’t always drive straight, so we had to drive forward and turn to the left at regular intervals. The robot drove for around 30 minutes and then got stuck when it fell off the edge of a sidewalk. I had to quickly drive over to retrieve it =)

The second mission was intended to drive from my location to a friend working at a local business. However, halfway through the mission, a suspicious member of the public grabbed the robot, threw it in a trash can, and called the police. I waited for the police and calmly explained that the robot was an educational telepresence project. I also told the person who reported the robot that there were no hard feelings, despite their interfering with and damaging my personal property.

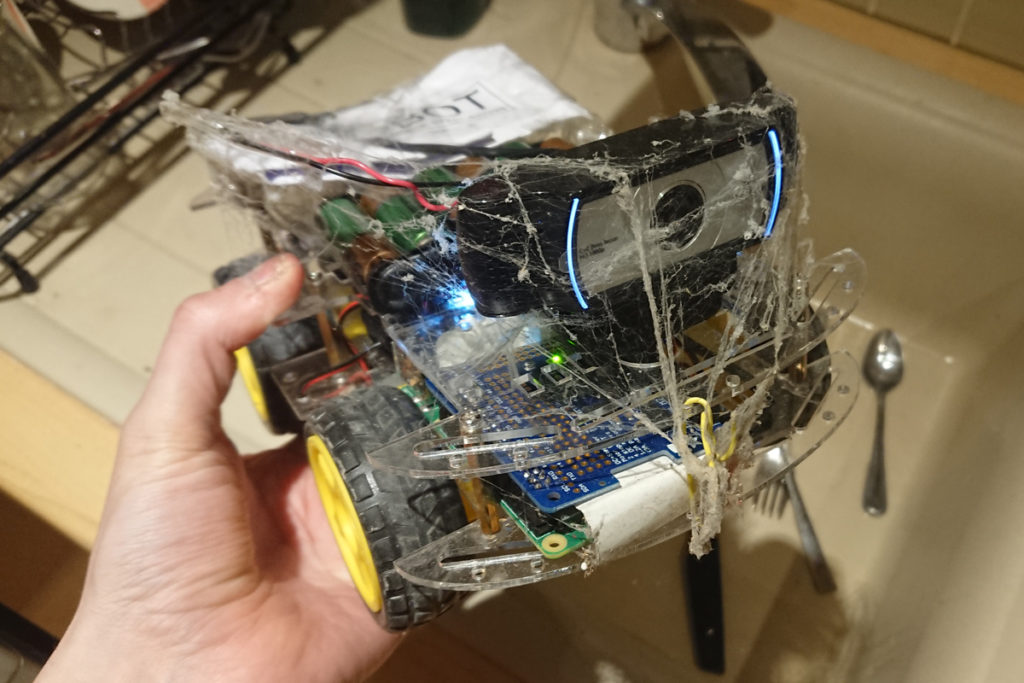

As part of the community site, it is common to leave your robot open for others to control. While it was unattended, a sneaky individual drove my robot into a void in the house and managed to get it covered in spider webs and other filth, as you can see below. Thanks.

I found that cats were very curious about the robot invading their territory, as you can see below:

I was very pleased with how the project worked out. It gave me the opportunity to use Python and Node, to fine-tune wireless networking and live video streaming, and, of course, to remotely control a robot as I had wanted to do for a long time.

If you want to build your own robot, the guide to ‘building a Bottington’ is a great place to start.

Update: Twilio saw this post and gave me a $20.00 credit. Thank you 😁

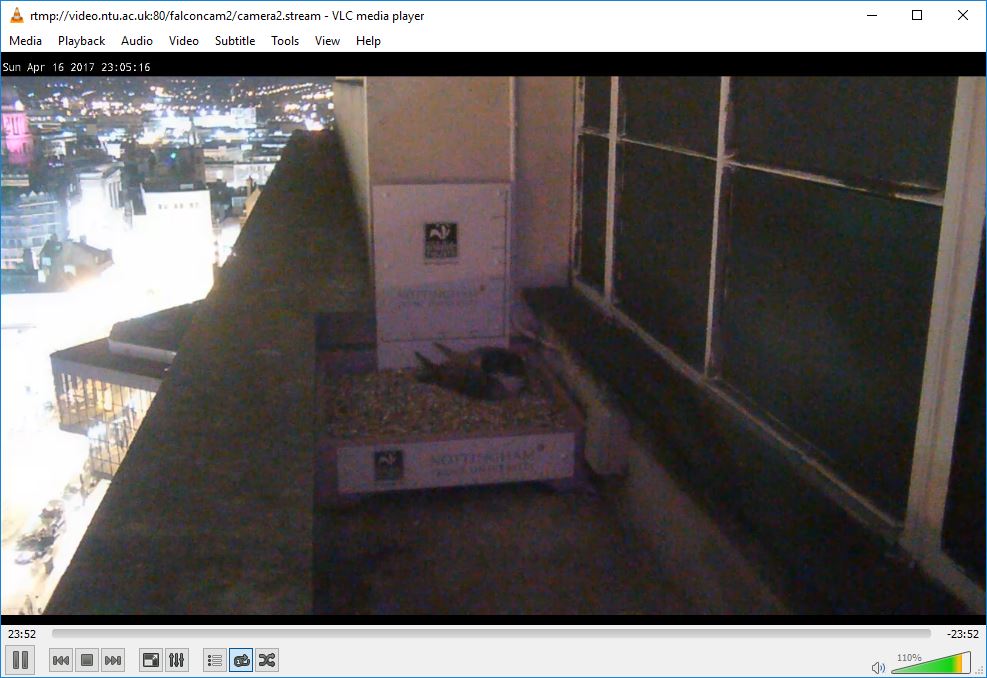

I’ve noticed many people having problems watching the cameras, as the official web site still uses the now-defunct Flash, so I wrote this short guide to one way of playing the cameras’ live streams. Download this playlist file and open it in a media player that supports M3U playlists:

https://www.jonhassall.com/downloads/ntufalconcams.m3u
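An M3U playlist is a plain-text file, so it is easy to make your own. The sketch below writes an extended M3U playlist; the titles and `rtmp://example.invalid/...` URLs are hypothetical placeholders, not the actual NTU camera addresses.

```python
# Sketch: build an extended M3U playlist. Titles and URLs are hypothetical
# placeholders, not the real camera stream addresses.

def make_m3u(streams: list[tuple[str, str]]) -> str:
    lines = ["#EXTM3U"]
    for title, url in streams:
        # A duration of -1 marks a live stream of unknown length
        lines.append(f"#EXTINF:-1,{title}")
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_m3u([
        ("Falcon cam 1", "rtmp://example.invalid/live/cam1"),
        ("Falcon cam 2", "rtmp://example.invalid/live/cam2"),
    ]), end="")
```

Saving the output with a `.m3u` extension lets a compatible media player open each entry as a separate item in its playlist.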


Hopefully, NTU will in future either stream directly in an HTML5-compatible format or set up a live stream conversion server.

I hope this helps people enjoy watching the falcons.

Using Node.js and Express, I created a web interface to remotely control ‘Line-Us’, a pen-drawing robot available from Cool Components.

You can watch a video of it functioning here:
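The web interface was written in Node.js; for consistency with the other examples, this is a Python sketch of the kind of low-level commands such an interface sends. It assumes the wire format used in Line-us’s published programming examples: a TCP connection to port 1337, G-code `G01` moves terminated by a null byte, and null-terminated responses, including a greeting on connect. The pen-up/pen-down Z values, the `line-us.local` hostname and the `draw_line` helper are assumptions for illustration.

```python
import socket

LINE_US_PORT = 1337  # assumption: port used in Line-us's published examples


def g01(x: int, y: int, z: int) -> bytes:
    """Encode a G01 move as a null-terminated command (assumed wire format)."""
    return f"G01 X{x} Y{y} Z{z}".encode("ascii") + b"\x00"


def read_response(sock: socket.socket) -> bytes:
    """Read bytes up to (not including) the next null terminator."""
    data = bytearray()
    while True:
        ch = sock.recv(1)
        if not ch:
            raise ConnectionError("connection closed by Line-us")
        if ch == b"\x00":
            return bytes(data)
        data += ch


def draw_line(host: str, start: tuple[int, int], end: tuple[int, int]) -> None:
    """Hypothetical helper: lift pen, move to start, lower pen, draw, lift."""
    pen_up, pen_down = 1000, 0  # assumed Z values
    moves = [(*start, pen_up), (*start, pen_down), (*end, pen_down), (*end, pen_up)]
    with socket.create_connection((host, LINE_US_PORT), timeout=10) as sock:
        read_response(sock)  # greeting sent on connect (assumed)
        for x, y, z in moves:
            sock.sendall(g01(x, y, z))
            read_response(sock)  # wait for acknowledgement before the next move
```

A call such as `draw_line("line-us.local", (1000, -200), (1500, 200))` would draw a single stroke. Waiting for each response before sending the next move keeps the robot’s input from being flooded, which matters when commands arrive from a remote web page.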

Using my DJI Spark drone and taking around 40 photographs using its built-in camera, I was able to make a 3D reconstruction of where I live:

Videos from when I had a drone back in 2017:

See also the 3D model I created from my drone photographs using photogrammetry and a lot of patience:

Aerial 3D Model Created with Drone Through Photogrammetry